6 TDD Examples

6.1 Reasons to study

The first time I heard about TDD was at university in the course “Software Engineering”. We had to use it inside of a project immediately. At first, I did not understand the idea behind it, but I got it by using it inside of the project. Only because of that, I got how useful this process can be.

‒ Max from Mannheim, Germany 6

Before I start a project in a language I have not used for a while, I look for current recommendations from the community.

For example, once I made a tiny project in Haskell.

At that time, for managing the dependencies, builds, and publishing, one tool seemed to be the default choice. It was called “Stack”.

Four years passed, and at a DevOps conference I talked to a random man, who turned out to have considerable experience with Haskell.

It was Rok Garbas, working on reproducible dev and production environments with Flox.

He said: Stack used to be the default, but these days Cabal (another build and package management tool for that ecosystem) has more features and is so reliable that there is no real reason to use Stack anymore.

Like in this example, the recommended way changes every few years.

In the Python world, the progression was: pip, then Pipenv, then Poetry, then anything-but-Poetry.

The last trend arose because Poetry at some point had recurring problems that made it unreasonable to use for anything more serious than experimentation.

That trend was justified for a number of years, and faded only recently with stability improvements to Poetry, and with added support for installing projects from pyproject.toml by pip itself.

pip support meant: if Poetry errors prevented a package from installing, it was now reasonably simple to install that package using pip. If you used a virtualenv as the install target, the result was similar to installing that package with Poetry, only without the automation of these two steps.

Because developer tooling changes this quickly, every time you start a project you need to try several approaches before you decide which of the most convenient tools available is stable enough to trust with the hours, days, and weeks of your life.

Once you decide on the tool, you need to make one more decision: which test tooling will you use?

I have some experience in both of these areas, which means I have made painful mistakes in each of them.

Hopefully with this book, you will have some reasonable defaults to start with for both project management and test tooling.

Someone will be worried: If I pick the tool you recommend and I find it is not suited to my needs, will I not be in much more trouble than I would be had I given it more research instead of relying on someone’s recommendation?

My answer is: if you conduct some research, then choose the tool, you are still likely to find something seemingly better, earlier than you would wish.

With build and dependency management, it is much more important to keep track of your dependencies at all than to pick the “right” tool from the start.

Once you find a better tool, it will be much easier to migrate your current configuration to it than it would be to create such a configuration from scratch when you “finally found the perfect tool”.

It is very similar with tests.

Once you find a tool you like better than the recommended one, you are always free to migrate your existing checks to it.

The most difficult part of the work, figuring out what to test and how to test it, will already have been done.

My experience is still fresh from migrating tests from unittest style to pytest style. It proved to be so simple it could even be automated for the most part. Had I decided to wait with writing the tests until pytest became more standard, I would now have a lot of tests to write from scratch.

And a lot of untested (and un-testable) code.
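To show how small such a migration can be, here is a minimal sketch of one check written in both styles. The function add and the test names are made up for illustration; they are not taken from a real project.

```python
import unittest


def add(a, b):
    """Hypothetical function under test."""
    return a + b


# unittest style: a class that inherits from TestCase, with assert methods.
class AddTest(unittest.TestCase):
    def test_adds_two_numbers(self):
        self.assertEqual(add(2, 3), 5)


# pytest style: a plain function and a plain assert statement.
def test_adds_two_numbers():
    assert add(2, 3) == 5
```

The thinking behind the check (what to call, what to expect) stays the same. Only the wrapping changes, which is why the migration is mostly mechanical.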

I invite you to start with one of the presented examples, and feel free to move on when the time comes.

6.2 Assembly

If you’ve been dreaming of writing some Assembly just like I did, here is an occasion to have some fun!

Unit testing is especially valuable with Assembly code. It makes sure your routines get meaningful names, and an example of calling each one is always on hand.

How is testing typically done on functions in Assembly?

Although there are people test driving their Assembly, I could not find real unit testing support. The official way to go is to use C/C++ unit testing frameworks and write tests against the global functions in the generated OBJ files.

– Peter Kofler 7

Peter Kofler tests assembly directly from assembly in one example 8.

Michael Baker from 8th Light calls his functions from C 9.

The most practical, and terse, way for us is described here: 10.

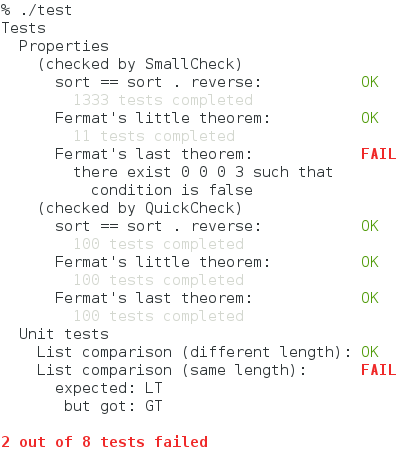

6.3 Haskell: test output as a tree

In this exercise, we will be using Tasty 11, a Haskell library for combining various types of tests into a single output stream.

Preview:

There is exceptional clarity in test output presented in the form of a tree.

One gain from that is that we avoid the necessity to repeat ourselves, because we can say:

- Properties
  - Fermat’s little theorem: OK/FAIL
  - Fermat’s last theorem: OK/FAIL

Otherwise we are left with two choices. We can repeat ourselves when naming the test methods, as in test_property_fermats_little_theorem and test_property_fermats_last_theorem. Or we can risk losing context information when a test suddenly fails and we read: “Test failed: fermats_little_theorem”. In such a case, we may feel confused and eager to ask: “What was it about Fermat’s little theorem that failed in this test?”.
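The idea of a test tree does not depend on Haskell. As a rough illustration only (this is plain Python, not Tasty, and every name in it is made up), the sketch below groups two small checks under a "Properties" node and prints the result as an indented tree. The checks test the theorems only on a handful of small numbers.

```python
def fermat_little(p=7):
    # For a prime p, a**p leaves the same remainder as a when divided by p.
    return all(pow(a, p, p) == a % p for a in range(1, 50))


def fermat_last(limit=12, n=3):
    # No a**n + b**n equals some c**n, checked for small positive integers only.
    powers = {c ** n for c in range(1, 2 * limit)}
    return not any(
        a ** n + b ** n in powers
        for a in range(1, limit)
        for b in range(1, limit)
    )


# The group name is written once and shared by all checks below it.
TESTS = {
    "Properties": {
        "Fermat's little theorem": fermat_little,
        "Fermat's last theorem": fermat_last,
    }
}


def render(tree, depth=0):
    """Run every check in the tree and return the report as indented lines."""
    lines = []
    for name, node in tree.items():
        indent = "  " * depth
        if callable(node):
            lines.append(f"{indent}{name}: {'OK' if node() else 'FAIL'}")
        else:
            lines.append(f"{indent}{name}")
            lines.extend(render(node, depth + 1))
    return lines


print("\n".join(render(TESTS)))
```

A failing line still sits under its group heading, so the context ("this was a property check") is never lost.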

6.4 Introduction: Installing Nix

With Nix, you will be able to define all you need for your project, including system-level dependencies like gcc, in a single file.

That file could be default.nix, shell.nix, or flake.nix.

With Nix and that file, you will be able to set up a fully functional environment for developing or deploying your application.

The original (basic) Nix installer fails on Fedora (thanks, SELinux), and gets blocked by security profiles on macOS (creating/updating users and groups).

The alternative installer from a group called Determinate Systems is said to solve the problem at least for Fedora.

It includes some enhancements with respect to the stock installer.

Hence, we will use the Determinate Systems installer for our work.

curl --proto '=https' --tlsv1.2 -sSf -L https://install.determinate.systems/nix | \
  sh -s -- install

6.5 Codility: How TDD can help you get a job

Codility is an online platform for running coding tests.

As a developer looking for work, I met Codility when trying to join a global network of vetted professionals.

One of the interview stages was a Codility test.

The network said it only accepted the “top 3%” of all their candidates.

To recruiters, Codility offers a streamlined screening process optimized to minimize your interaction with the candidates, keeping the process rigorous and result-oriented.

An example of a Codility question is:

Find the longest sequence of zeros in the binary representation of an integer.

The platform lets you code inside their specialized environment.

Codility challenges require you to design and implement an algorithm to solve a specific problem.

You can use a range of popular languages, including Java, Python, and JavaScript.

Of all the languages I have tried (C++, Java, JavaScript, Python), I observed the most efficiency when I used Python.

Unless your test supervisor (e.g. a prospective employer) requires you to pick a specific language, I would advise you to pick Python too if you know it at a comfortable level.

Codility exercises are not so much about knowing a language, as about being able to solve problems in code, in the language of your choice. At least as long as you choose from their list of available languages.

In the challenges, if you pick C++, you don’t get the “speed bonus” you would normally expect when implementing things in C++.

However, you get all the complexity C++ has compared to Python.

So it makes sense to pick the least complicated language you know.

It would be even better if it was also the most flexible language you know.

Python offers you a wealth of packages and available answers on Q&A sites.

In Python, you can do TDD conveniently with doctest, which can be used both in Codility and locally.

To make use of TDD in Codility, import the following function from the doctest module:

from doctest import run_docstring_examples

With this function, you will be able to specify an example in the form of a docstring, and then run that example to check if its output is what you specified.

from doctest import run_docstring_examples

def get_square(x):
    """
    >>> get_square(2)
    4
    """
    pass  # placeholder body: the implementation comes next

def solution(x):
    # Run the examples from the docstring of get_square on every call.
    run_docstring_examples(get_square, globals())
    return 0

Every run of this code in Codility is likely to report an error that comes from outside the run_docstring_examples function.

Why is that? Checking the output, we see that it is different from what Codility expected for the example input (bundled with the task).

To make sure this error doesn’t clutter the output, we need to return the right value from the solution function.
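Putting these pieces together for the example question quoted earlier, a minimal sketch could look like this. The helper name longest_zero_run is my own, and I take the task literally as worded in this chapter: every run of zeros counts, including trailing ones. The real Codility task may define the sequence differently, so adjust the examples in the docstring first.

```python
from doctest import run_docstring_examples


def longest_zero_run(n):
    """
    >>> longest_zero_run(9)     # 0b1001
    2
    >>> longest_zero_run(15)    # 0b1111
    0
    >>> longest_zero_run(529)   # 0b1000010001
    4
    """
    # Splitting the binary digits on "1" leaves only the runs of zeros.
    runs = bin(n)[2:].split("1")
    return max(len(run) for run in runs)


def solution(n):
    # Prints a report only when an example in the docstring fails.
    run_docstring_examples(longest_zero_run, globals())
    # Return the real answer so the platform's own check passes too.
    return longest_zero_run(n)
```

When all examples pass, run_docstring_examples prints nothing, so the output stays clean.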

6.6 C: Keep an IP address up to date

There is a home server that resides under an IP address that is known to change every time the home router is rebooted.

The address has never changed while the home router remained online.

The server has a DNS alias, a record of type “A”.

The entry maps a friendly subdomain to the server. Let’s imagine that domain is cloud.tddexamples.com.

6.8 LaTeX: Testing your package

\ASSERT and \ASSERTSTR

Asserts if the full expansion of the two required arguments are the same: the \ASSERT function is token-based, the \ASSERTSTR works on a string basis.

‒ l3build

It may often be difficult to use TDD with l3build. These tests are designed with regression testing in mind.

An alternative would be a simple assert.sty library like this one:

assert.sty.

6.9 Python: Upload JPG files back to camera after modification

I have a problem. I like to keep all my photos from the current quarter on my camera (Sony A6000).

I treat that camera as the main store for photos during the season.

I do save them to my computer too during each quarter. That copy, however, I treat as a backup, not the main photo store.

The main object in some of my photos is significantly darker than I would like.

That lets my camera capture some of the brightest colors in the composition too (like clouds in the sky).

That said, I’m not ready to stick to “Point mode” for light measurement, with the measurement point located in the dark area.

I am able to adjust the brightness of the darker pictures. I use the accompanying RAW files (ARW on Sony cameras) to accomplish that.

After adjusting the photos, I save them as JPEG. This is the format I find them in on the camera card (SD).

However, when I save the edited pictures back to JPEG and copy them to my camera, previewing them on the camera does not work. The camera says it cannot read the picture.

Make sure the picture files are saved in the JPEG format and conform to the Design rule for Camera File system (DCF) standards. If you used software like Photoshop® to make changes to the images, make sure the images are saved as Baseline Standard.

– https://www.sony.com/electronics/support/articles/00014257

I’ve tried some scripts that claimed to make a JPEG file DCF-compatible.

This was one of these scripts:

#!/usr/bin/env bash

# Requires:

#

# - ExifTool

# - ImageMagick

INPUT=TEST.JPG

OUTPUT=A0000001.JPG # DCF compatible

THUMBNAIL=THUMB.JPG

ORIGINAL=R0000349.JPG # taken with the camera

convert -sampling-factor 4:2:2 $INPUT $OUTPUT

exiftool -TagsFromFile $ORIGINAL -all:all -unsafe -XML:All -JFIF:ALL= $OUTPUT

convert $INPUT -resize 160x120 -background black -gravity center -extent 160x120 -sampling-factor 4:2:2 $THUMBNAIL

exiftool "-ThumbnailImage<=$THUMBNAIL" $OUTPUT

ORIENTATION=1 # 1 = 0°, 3 = 180°, 6 = 90°, 8 = -90°

exiftool -Orientation=$ORIENTATION -n $OUTPUT

Source: https://photo.stackexchange.com/a/119164

The problem with all these recipes was: every time I processed a JPEG file, I had to copy the file to my camera, then turn the camera on and initiate a “database recovery”. Pictures copied to the card were not visible unless I did this additional step.

Every attempt so far has resulted in the error mentioned above: the camera cannot read the image.

Since that testing process is tedious, it would be very convenient to do it on my computer in full instead of copying the files.

I found one idea here:

#!/bin/sh

find . -type f \

\( -iname "*.jpg" \

-o -iname "*.jpeg" \) \

-exec jpeginfo -c {} \; | \

grep -E "WARNING|ERROR" | \

cut -d " " -f 1

Another one from the same place:

djpeg -fast -grayscale -onepass file.jpg > /dev/null

If it returns an error code, the file has a problem. If not, it’s good.

Some additional info here:

Adding -a -G1 shows additional JFIF tags, which are only in the image generated by ImageMagick.

– https://exiftool.org/forum/index.php?topic=11487.0

DCF requirements summary:

The root folder name is DCIM (all capitals).

Within the DCIM folder are “roll” folders whose names start with a number 100 to 999 and are followed by exactly 5 (no less, no more) capital letters and/or digits.

Within each roll folder are image files, with names that start with exactly 4 (no more, no less) capital letters and/or digits, followed by a 4-digit number from 0001 to 9999, followed by the suffix “.JPG” (all capitals).

The image files must be sRGB JPEG. (DCF 2.0 relaxes this to permit Adobe RGB JPEGs, but I’ll guarantee that your TV can’t handle them.)

– https://www.dpreview.com/forums/thread/2127140
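The naming rules from this summary are easy to check in code. Below is a minimal sketch that follows the quoted summary only, not the full DCF standard. The function name is_dcf_path and the example paths are my own.

```python
import re
from pathlib import PurePosixPath

# "Roll" folder: a number from 100 to 999, then exactly 5 capitals and/or digits.
ROLL_DIR = re.compile(r"[1-9][0-9]{2}[A-Z0-9]{5}")

# Image file: exactly 4 capitals and/or digits, a number 0001..9999, then ".JPG".
IMAGE_FILE = re.compile(r"[A-Z0-9]{4}(?!0000)[0-9]{4}\.JPG")


def is_dcf_path(path):
    """Check the last three path components against the summary above."""
    parts = PurePosixPath(path).parts
    if len(parts) < 3:
        return False
    root, roll, name = parts[-3:]
    return (
        root == "DCIM"
        and ROLL_DIR.fullmatch(roll) is not None
        and IMAGE_FILE.fullmatch(name) is not None
    )


print(is_dcf_path("DCIM/100MSDCF/DSC00001.JPG"))
print(is_dcf_path("DCIM/100MSDCF/edited.jpg"))
```

This only covers file and folder names. It says nothing about the contents of the file, such as the color space.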

To quickly check whether a JPG file may be valid, let’s create a simple script similar to the one above.

You will find that script in rc/scripts/bash/check_jpg.

Make sure the script has execution permissions set:

$ chmod +x rc/scripts/bash/check_jpg

That script relies on a command named jpeginfo.

In my case, my operating system lacked that:

$ rc/scripts/bash/check_jpg ~/Downloads/original.jpg

rc/scripts/bash/check_jpg: line 5: jpeginfo: command not found

On Fedora, this package can be installed as follows.

First, let’s check if a package under a similar name is available.

$ sudo dnf search jpeginfo

(...)

jpeginfo.x86_64 : Error-check and generate informative listings from JPEG files

The listing shows there is a package under this name. Let’s install it with sudo dnf install jpeginfo.

Once installed, let’s run the tester script to check the original JPG file:

$ rc/scripts/bash/check_jpg original.jpg

$ echo $?

0

The script exited without errors. Let’s check the modified file.

$ rc/scripts/bash/check_jpg edited.jpg

$ echo $?

0

No errors.

With just jpeginfo:

$ jpeginfo -c original.jpg

/home/patryk/Downloads/original.jpg 6000 x 4000 24bit N Exif,MPF 5046272 OK

$ jpeginfo -c edited.jpg

/home/patryk/Downloads/edited.jpg 6020 x 4024 24bit N Exif,ICC,XMP 600389 OK

We see that apart from the slight difference in image dimensions, there is a difference in the embedded metadata (MPF vs XMP), and the edited file additionally contains ICC data.

ICC seems like additional data inside the file.

Cameras do not necessarily expect such data there, so we have a clue as to why that image may not be getting uploaded correctly.

Let’s check the other testing method that came up in our search.

$ djpeg -fast -grayscale -onepass file.jpg > /dev/null

It turns out it is already installed on my computer:

$ which djpeg

/usr/bin/djpeg

$ djpeg -fast -grayscale -onepass original.jpg > /dev/null

$ echo $?

0

$ djpeg -fast -grayscale -onepass edited.jpg > /dev/null

$ echo $?

0

No errors in either case. This indicates the problem must be more subtle than the presence of errors inside the file.

As you can see, TDD does not let us skip the initial step of doing our research.

You may feel we would have found the solution a long time ago by trying different ways to generate the image, and testing the images directly on the camera.

That is possible. However, with each failed attempt, we end up without a working solution and, what is worse, without any idea of what could have gone wrong.

By preparing a test first, we make sure we know what we are looking for in an image. Once we generate any image, we will have a single command to check within seconds if that image is worth uploading to the camera for testing.
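One way to turn the clues gathered so far into such a check is to read the JPEG segment markers directly. The sketch below is a hypothetical helper of my own (jpeg_segments and summarize are not part of any script quoted here). It assumes the standard JPEG layout: each segment starts with the byte 0xFF and a marker byte, followed by a two-byte big-endian length. It reports whether a file is baseline or progressive, and whether it carries Exif or ICC data. Which of these properties the camera actually rejects is still an open question at this point.

```python
# Markers that carry no length field: TEM and RST0..RST7.
STANDALONE = {0x01} | set(range(0xD0, 0xD8))


def jpeg_segments(data):
    """Yield (marker, payload) for every segment up to and including SOS."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    pos = 2
    while pos + 2 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # fill byte in front of a marker
            pos += 1
            continue
        if marker in STANDALONE:
            pos += 2
            continue
        # The length counts its own two bytes, but not the marker itself.
        length = int.from_bytes(data[pos + 2:pos + 4], "big")
        yield marker, data[pos + 4:pos + 2 + length]
        if marker == 0xDA:  # SOS: compressed image data follows
            return
        pos += 2 + length


def summarize(data):
    """Collect the properties we suspect matter to the camera."""
    info = {"baseline": False, "progressive": False, "exif": False, "icc": False}
    for marker, payload in jpeg_segments(data):
        if marker == 0xC0:  # SOF0: baseline DCT
            info["baseline"] = True
        elif marker == 0xC2:  # SOF2: progressive DCT
            info["progressive"] = True
        elif marker == 0xE1 and payload.startswith(b"Exif\x00"):
            info["exif"] = True
        elif marker == 0xE2 and payload.startswith(b"ICC_PROFILE\x00"):
            info["icc"] = True
    return info
```

To use it on a real file, read the file in binary mode and pass the bytes to summarize. Comparing the result for original.jpg and edited.jpg then gives a concrete list of differences to eliminate one by one.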

I see yet another script:

INPUT=TEST.JPG

OUTPUT=A0000001.JPG # DCF compatible

THUMBNAIL=THUMB.JPG

ORIGINAL=R0000349.JPG # taken with the camera

convert -sampling-factor 4:2:2 $INPUT $OUTPUT

exiftool -TagsFromFile $ORIGINAL -all:all -unsafe -XML:All -JFIF:ALL= $OUTPUT

convert $INPUT -resize 160x120 -background black -gravity center \
  -extent 160x120 -sampling-factor 4:2:2 $THUMBNAIL

exiftool "-ThumbnailImage<=$THUMBNAIL" $OUTPUT

Source: https://github.com/ImageMagick/ImageMagick/discussions/2349

Let’s try it out.

You can find a version with increased flexibility, for running on various files, in rc/scripts/bash/dcf.

6.9.1 Init a project with Uv

From the very beginning, we do not want any information about our project to get lost.

If we build a wonderful package, then take it to another system for deployment or further development, we want to be sure one command will suffice to get the project ready so that we can return to work smoothly.

uv is advertised as:

- A single tool to replace pip, pip-tools, pipx, poetry, pyenv, twine, virtualenv, and more.

- 10-100x faster than pip.

– https://github.com/astral-sh/uv

uv can use the file pyproject.toml, just like Poetry.

6.10 How NOT to do TDD

Once, my task was to implement a parser for a standard document format. I took the specification and started implementing it.

I did it instead of talking to the users about what they would want to have implemented.

I implemented a bunch of features.

The users were not happy with them. One feature would have sufficed. The one that was not there.

6.11 The missing ingredient of TDD

Don’t be afraid to make mistakes.

‒ “What can we learn about iterative project planning from space travel?”, Krzysztof Borcz 12

I initially got the impression that TDD is about running tests to avoid running the program as a whole.

This got me in trouble.

I wrote all the tests. I made sure the tests passed, even in the most sophisticated corner cases.

Then I connected the code to the main routine of the program, and ran the program.

The program was so slow that it would not even load within a few minutes. And then it crashed anyway.

Debugging it was a nightmare. The same nightmare I hoped I could avoid by using TDD.

Finally, I watched “How Not to Land an Orbital Rocket Booster” 13.

When Elon Musk was designing SpaceX spaceships, he would send a rocket into the air every month or every few months.

He did not trust a near-perfect unit test suite to prove the rocket was going to fly. He would actually set the rocket up to start and return, and let it fly. Even if it was going to finish in pieces.

Multiple times, launches and landings failed, but every time the SpaceX team would learn something new, and come back the next time, better prepared.

I recognised this was the answer to my dilemma: write unit tests, follow the TDD cycle of red-green-refactor, and once in a while run the program end-to-end.

Doing that will make sure you detect your blind spots in time to fix them. Perhaps using TDD on the way. But don’t avoid running the program as a whole until the very end.
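A whole-program run can itself be a tiny automated check that sits next to the unit tests. The sketch below is only an illustration under my own assumptions: PROGRAM is a stand-in command that prints one word, and you would replace it with the command that starts your real application. The function name smoke_test and the ten-second limit are made up as well.

```python
import subprocess
import sys

# Stand-in for the real program (hypothetical): replace with your own command.
PROGRAM = [sys.executable, "-c", "print('ready')"]


def smoke_test(command, timeout_seconds=10):
    """Run the whole program once; fail if it crashes or takes too long."""
    # subprocess.run raises TimeoutExpired when the time limit is exceeded.
    result = subprocess.run(
        command,
        capture_output=True,
        text=True,
        timeout=timeout_seconds,
    )
    assert result.returncode == 0, result.stderr
    return result.stdout


print(smoke_test(PROGRAM).strip())
```

A check like this would have caught both of my problems, the slow start and the crash, long before the unit test suite was complete.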